EagleView: A Video Analysis Tool for Visualising and Querying Spatial Interactions of People and Devices

In the ACM International Conference on Interactive Surfaces and Spaces (ACM ISS'18)

November 25 to 28, 2018, in Tokyo, Japan. Best Paper Honourable Mention Award.

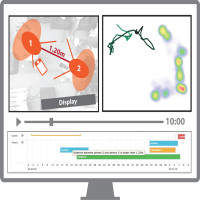

To study and understand group collaborations involving multiple handheld devices and large interactive displays, researchers frequently analyse video recordings of interaction studies to interpret people’s interactions with each other and/or devices. Advances in ubicomp technologies allow researchers to record spatial information through sensors in addition to video material. However, the volume of video data and the high number of coding parameters involved in such an interaction analysis make this a time-consuming and labour-intensive process. We designed EagleView, which provides analysts with real-time visualisations during video playback and an accompanying data stream of tracked interactions. Real-time visualisations take into account key proxemic dimensions, such as distance and orientation. Overview visualisations show people’s position and movement over longer periods of time. EagleView also allows the user to query people’s interactions with an easy-to-use visual interface. Results are highlighted on the video player’s timeline, enabling quick review of relevant instances. Our evaluation with expert users showed that EagleView is easy to learn and use, and that the visualisations allow analysts to gain insights into collaborative activities.
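
The idea of querying tracked interactions and highlighting matches on a timeline can be illustrated with a small sketch. This is not EagleView's implementation. It assumes a hypothetical tracking format in which each sample holds a timestamp plus each person's floor position (in metres) and heading (in degrees), and it uses illustrative thresholds (1.5 m and 30 degrees) for a "face-to-face" query. For every sample it computes two proxemic dimensions, distance and relative orientation, and then merges consecutive matching samples into time intervals of the kind that could be highlighted on a video player's timeline. The names `Pose`, `distance`, `facing_offset_deg` and `query_intervals` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Hypothetical tracked sample for one person (assumed format)."""
    x: float            # floor position in metres
    y: float            # floor position in metres
    heading_deg: float  # 0 degrees = +x axis, counter-clockwise positive


def distance(a: Pose, b: Pose) -> float:
    """Proxemic distance: Euclidean distance on the floor plane."""
    return math.hypot(b.x - a.x, b.y - a.y)


def facing_offset_deg(a: Pose, b: Pose) -> float:
    """Proxemic orientation: absolute angle (0..180) between a's heading
    and the direction from a to b. 0 means a looks straight at b."""
    bearing = math.degrees(math.atan2(b.y - a.y, b.x - a.x))
    # Wrap the difference into [-180, 180) before taking its magnitude.
    diff = (bearing - a.heading_deg + 180.0) % 360.0 - 180.0
    return abs(diff)


def query_intervals(frames, max_dist=1.5, max_angle=30.0):
    """Return (start, end) timestamps of runs of consecutive samples where
    the two people are within max_dist metres and each faces the other
    within max_angle degrees. `end` is the timestamp of the last matching
    sample in each run.

    frames: list of (timestamp_seconds, pose_a, pose_b), sorted by time.
    """
    intervals = []
    start = None
    prev_t = None
    for t, a, b in frames:
        match = (distance(a, b) <= max_dist
                 and facing_offset_deg(a, b) <= max_angle
                 and facing_offset_deg(b, a) <= max_angle)
        if match and start is None:
            start = t                          # a new run begins
        elif not match and start is not None:
            intervals.append((start, prev_t))  # close the current run
            start = None
        prev_t = t
    if start is not None:                      # run still open at the end
        intervals.append((start, prev_t))
    return intervals


# Usage example with made-up samples for two people, A and B.
frames = [
    (0.0, Pose(0, 0, 0), Pose(3, 0, 180)),    # facing each other, but 3 m apart
    (0.5, Pose(0, 0, 0), Pose(1, 0, 180)),    # 1 m apart, face to face
    (1.0, Pose(0, 0, 0), Pose(1, 0, 180)),    # still face to face
    (1.5, Pose(0, 0, 90), Pose(1, 0, 180)),   # A has turned away
    (2.0, Pose(0, 0, 0), Pose(1.2, 0, 170)),  # face to face again
]
print(query_intervals(frames))  # [(0.5, 1.0), (2.0, 2.0)]
```

Returning intervals rather than individual frames keeps the result small and maps directly onto highlighted spans of a timeline. A real analysis tool would also need to handle tracking gaps, noisy headings, and groups of more than two people.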

Watch my talk at ISS in November 2018 in Tokyo: