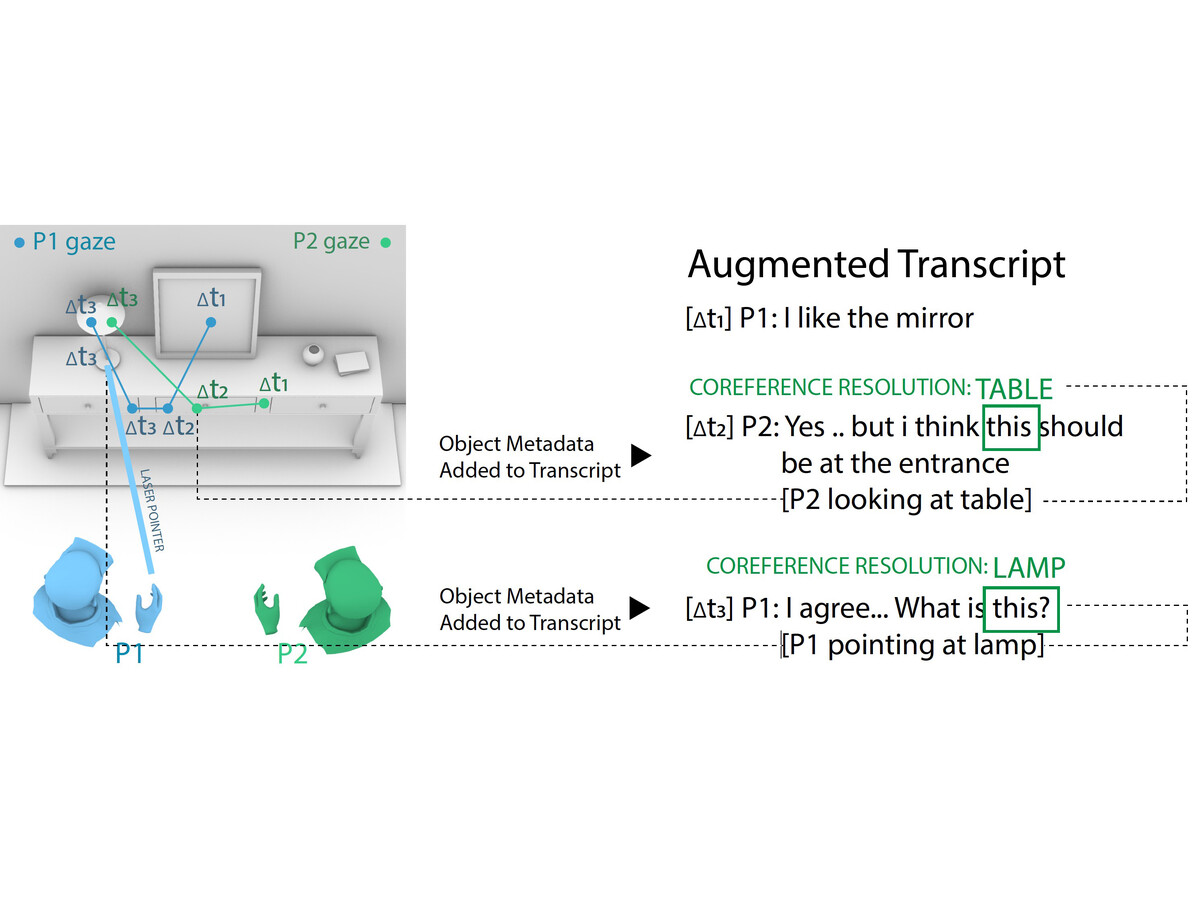

tl;dr: A system that augments VR speech transcripts with textual descriptions of ambiguous references to objects (such as "it" or "there").

Understanding transcripts of immersive multimodal conversations is challenging because speakers frequently rely on visual context and non-verbal cues, such as gestures and visual attention, which are not captured in speech alone. This missing information makes coreference resolution (the task of linking ambiguous expressions like “it” or “there” to their intended referents) particularly difficult. In this paper, we present a system that augments VR speech transcripts with eye-tracking data, laser-pointing data, and scene metadata to generate textual descriptions of non-verbal communication and the corresponding objects of interest. To evaluate the system, we collected gaze, gesture, and voice data from 12 participants (6 pairs) engaged in an open-ended design critique of a 3D model of an apartment. Our results show a 26.5% improvement in coreference resolution accuracy by a GPT model when using our multimodal transcript compared to a speech-only baseline.
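
To make the idea of transcript augmentation concrete, the following is a minimal illustrative sketch, not the system described in this paper. It assumes word-level timestamps for the speech and a list of gaze or laser-pointer events already mapped to object names from the scene metadata. All names here (`Word`, `PointerEvent`, `augment`, the `AMBIGUOUS` word list, and the `window` parameter) are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    start: float  # seconds
    end: float    # seconds


@dataclass
class PointerEvent:
    kind: str         # "gaze" or "laser" (assumed labels)
    object_name: str  # assumed to come from scene metadata
    start: float
    end: float


# Assumed list of ambiguous referring expressions; a real system may differ.
AMBIGUOUS = {"it", "this", "that", "there", "here", "these", "those"}


def overlap(a_start, a_end, b_start, b_end):
    """Length in seconds of the overlap between two intervals (0 if disjoint)."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def augment(words, events, window=0.5):
    """Annotate each ambiguous word with the object that was gazed at or
    pointed at for the longest time within `window` seconds of the word."""
    out = []
    for w in words:
        out.append(w.text)
        if w.text.lower().strip(".,!?") not in AMBIGUOUS:
            continue
        best, best_overlap = None, 0.0
        for e in events:
            ov = overlap(w.start - window, w.end + window, e.start, e.end)
            if ov > best_overlap:
                best, best_overlap = e, ov
        if best is not None:
            verb = "looking at" if best.kind == "gaze" else "pointing at"
            out.append(f"[{verb} {best.object_name}]")
    return " ".join(out)


words = [Word("Move", 0.0, 0.25), Word("it", 0.25, 0.5), Word("there", 0.75, 1.0)]
events = [
    PointerEvent("gaze", "sofa", 0.0, 0.5),
    PointerEvent("laser", "window", 0.75, 1.5),
]
print(augment(words, events, window=0.25))
```

In this toy example, each ambiguous word is followed by a bracketed description of the object that received the most gaze or pointing time around it, which is the kind of textual cue a language model could then use when resolving the reference.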